There are many ways we can empower robots to navigate their environment autonomously. We can equip them with GPS, use an array of cameras and sensors (including smell), even get them to talk with each other and strategize.

But if you want a robot to do all that on its own, without relying on any external sensors, you'll most likely have to use a process known as SLAM, or Simultaneous Localization and Mapping.

SLAM is a remarkable technique that enables robots to safely navigate an environment they have no prior knowledge of. However, SLAM has its weak spots: it relies heavily on environments rich in unique geometric features. Let's look at how SLAM operates so you can capitalize on this revolutionary method of 3D data capture.

To power a SLAM algorithm you need at least two sensors: one to track the robot's movement and orientation, and another to "see" the environment it's trying to navigate. Most modern robots come equipped with the first sensor, called an inertial measurement unit, or IMU. An IMU usually combines an accelerometer and a gyroscope, which measure linear acceleration and angular velocity along three axes each (six axes in total) so the robot can track its motion in 3D space. It's the same kind of sensor that tells your smartphone whether it's in portrait or landscape mode.

Once the robot understands its orientation and trajectory, it needs to understand its environment and how to navigate through it safely and efficiently.

Forward-facing Velodyne LIDAR Puck LITE, the "eyes" of the ExynAero

Enter the robot's "eyes." Our robots use a Velodyne LiDAR Puck LITE sensor to see the world around them. The sensor fires 16 beams, each at a slightly different angle, and is rotated through 360° to give the robot an almost complete view of its environment (obscured only by the robot itself). The Puck fires 300,000 laser pulses every second, and each return it captures carries a timestamp, an intensity rating, and x, y, z coordinates. The robot uses this data to create a real-time 3D map of its surrounding environment that we call a point cloud.
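To make the "x, y, z coordinate" part concrete, here is a minimal sketch of how a single LiDAR return (a range plus two angles) can be turned into a point. It assumes a 16-beam sensor whose beams are spaced 2° apart from -15° to +15°, a beam index that runs from the lowest beam to the highest, and an x-forward, z-up axis convention. The function and variable names are hypothetical and are not taken from any vendor driver or from ExynAI.

```python
import numpy as np

# Assumed beam layout: 16 elevation angles from -15 deg to +15 deg in 2 deg steps.
BEAM_ELEVATIONS_DEG = np.arange(-15.0, 16.0, 2.0)


def returns_to_points(ranges_m, azimuths_deg, beam_ids):
    """Convert range/azimuth/beam returns into an (N, 3) array of x, y, z."""
    ranges_m = np.asarray(ranges_m, dtype=float)
    azimuth = np.radians(np.asarray(azimuths_deg, dtype=float))
    elevation = np.radians(BEAM_ELEVATIONS_DEG[np.asarray(beam_ids)])

    # Project the range onto the horizontal plane, then split it into x and y.
    horizontal = ranges_m * np.cos(elevation)
    x = horizontal * np.cos(azimuth)
    y = horizontal * np.sin(azimuth)
    z = ranges_m * np.sin(elevation)
    return np.column_stack([x, y, z])


# Two example returns: one from the lowest beam, one from the highest.
points = returns_to_points([10.0, 5.0], [0.0, 90.0], [0, 15])
```

Stacking millions of these points, each tagged with its timestamp and intensity, is what produces the point cloud.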

As the LIDAR sweeps the area it creates a real-time 3D map

That's SLAM in a nutshell: you're using onboard sensors to build a map of an unknown environment while estimating where the robot is inside that map. But this is where SLAM forks into two separate branches: online and offline. Online SLAM, as you can likely guess, is active while the robot is in flight and prioritizes object detection and avoidance over the quality of the map. Offline SLAM takes place after the robot has landed and prioritizes map quality and loop closure, now that we don't have to worry about robot control.

Online SLAM is sloppy because it needs to be fast. Offline SLAM can take the time to run more complex algorithms that align the map to very specific geometric features.

For the robot to localize its position in the map and maintain its state, we use a feature-based LIDAR Odometry and Mapping (LOAM) pipeline. And this pipeline needs to be fast to keep up with a robot moving at 2 meters/second! As our gimballed LIDAR scans the environment, the LOAM algorithm aggregates this data into sweeps (2 sweeps per gimbal rotation) that it uses to build a fast local map with pose estimates of the robot's probable location. With each sweep, LOAM updates the map and uses the IMU data to correct the sweep for the robot's own motion.
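Motion correction matters because the robot keeps moving while a sweep is being collected, so points captured early in the sweep are measured from a different position than points captured late. The sketch below illustrates the idea under strong simplifying assumptions: the robot moves at a constant, known velocity during the sweep and does not rotate. A real pipeline would also correct for rotation using the IMU. The function name and parameters are hypothetical.

```python
import numpy as np


def deskew_sweep(points, timestamps, velocity, t_end):
    """Re-express every point in the sensor frame at the end of the sweep.

    Assumes constant linear velocity and no rotation during the sweep.
    """
    points = np.asarray(points, dtype=float)
    # Time remaining between each point's capture and the end of the sweep.
    dt = t_end - np.asarray(timestamps, dtype=float)
    # The sensor travels velocity * dt after the capture, so a static point
    # appears that much closer along the direction of travel.
    return points - dt[:, None] * np.asarray(velocity, dtype=float)


# A wall 10 m ahead, seen twice while flying toward it at 2 m/s.
raw = [[10.0, 0.0, 0.0], [9.0, 0.0, 0.0]]
corrected = deskew_sweep(raw, timestamps=[0.0, 0.5],
                         velocity=[2.0, 0.0, 0.0], t_end=0.5)
```

After correction both measurements of the wall land on the same spot instead of smearing along the flight direction.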

Our robots are actually building two maps on the fly (see figure below): one for edges, the other for surfaces. ExynAI runs complex equations on all LIDAR hits to classify them, while also excluding duplicates, stray points (like dust), and unstable edges.

Red points are edges; blue/green points are surfaces; turquoise points are the complete map
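One common way to separate edges from surfaces is the smoothness (curvature) score from the published LOAM method: for each point on a scan line, sum the offsets to its neighbors on either side. On a flat surface the offsets cancel and the score is near zero; at a corner they add up. The sketch below shows that published criterion only. Whether ExynAI uses this exact formula is an assumption, and the window size is an illustrative choice.

```python
import numpy as np


def loam_curvature(scan_line, half_window=5):
    """Smoothness score per point along one ordered scan line.

    Low values suggest a flat surface, high values suggest an edge.
    Points too close to either end of the line are left as NaN.
    """
    pts = np.asarray(scan_line, dtype=float)
    n = len(pts)
    curvature = np.full(n, np.nan)
    for i in range(half_window, n - half_window):
        neighbors = np.vstack([pts[i - half_window:i],
                               pts[i + 1:i + half_window + 1]])
        # Offsets to symmetric neighbors cancel out on a flat surface.
        offset_sum = (pts[i] - neighbors).sum(axis=0)
        curvature[i] = np.linalg.norm(offset_sum) / (
            len(neighbors) * np.linalg.norm(pts[i]))
    return curvature


# An L-shaped wall: 11 points along one face, then 10 along the other.
wall_a = np.column_stack([np.full(11, 5.0), np.linspace(-1.0, 0.0, 11)])
wall_b = np.column_stack([np.linspace(4.9, 4.0, 10), np.zeros(10)])
scores = loam_curvature(np.vstack([wall_a, wall_b]))
corner_index = int(np.nanargmax(scores))
```

In this example the highest score falls on the corner point where the two walls meet, which is the kind of point that would go into the edge map.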

ExynAI then converts all these points into voxel grids that the robot uses to plan paths through the map it's generating. You can think of a voxel like a 3D pixel, or a cube in Minecraft. We have multiple grids for the robot to understand solid objects, a safe flight corridor, and even explorable space. Our pipeline also applies a decay to these grids, so that voxels fade unless they keep being observed and our robots can detect changes to their environment in real time. This decay can be tuned for more or less dynamic environments.
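As a rough illustration of a decaying voxel grid, the sketch below keeps a score per voxel, refreshes the score whenever a LiDAR point lands in that voxel, and multiplies every score by a decay factor on each update. A voxel that stops receiving hits eventually drops below the occupancy threshold. The class, its parameters, and the specific decay rule are all hypothetical choices for illustration and are not ExynAI's implementation.

```python
import numpy as np


class DecayingVoxelGrid:
    """Sparse occupancy grid whose voxels fade unless re-observed."""

    def __init__(self, voxel_size=0.2, decay=0.5,
                 occupied_threshold=0.4, min_score=0.01):
        self.voxel_size = voxel_size
        self.decay = decay                    # tune for more/less dynamic scenes
        self.occupied_threshold = occupied_threshold
        self.min_score = min_score            # forget voxels below this score
        self.scores = {}                      # (i, j, k) -> score

    def voxel_index(self, point):
        """Integer (i, j, k) index of the voxel containing a point."""
        idx = np.floor(np.asarray(point, dtype=float) / self.voxel_size)
        return tuple(idx.astype(int).tolist())

    def update(self, points):
        """Decay all existing voxels, then refresh the ones hit this sweep."""
        self.scores = {k: v * self.decay for k, v in self.scores.items()
                       if v * self.decay >= self.min_score}
        for point in points:
            self.scores[self.voxel_index(point)] = 1.0

    def is_occupied(self, point):
        score = self.scores.get(self.voxel_index(point), 0.0)
        return score >= self.occupied_threshold


grid = DecayingVoxelGrid(voxel_size=0.5)
grid.update([[1.1, 0.2, 0.0]])   # an obstacle is observed
grid.update([])                  # one sweep later it is still remembered
grid.update([])                  # after two empty sweeps it has faded out
```

A decay factor closer to 1 makes the grid remember obstacles longer, which suits static scenes. A smaller factor clears moving objects faster.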

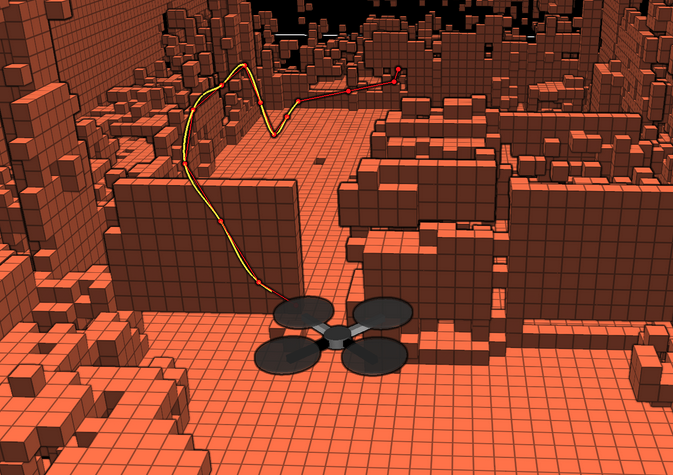

The ExynAero flying through its occupancy grid, detecting dynamic objects

Lastly, our robots "cull" the boundaries of the map they're generating so they can focus only on their immediate surroundings. This keeps online mapping fast and efficient. You can see an example of that in the GIF below. The blue area of the map is what's being prioritized, while the rest of the point cloud is culled once it's a certain distance away from the sensor.

The turquoise sphere is the fast local map our robots use to navigate safely and efficiently
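In its simplest form, culling is a distance filter around the sensor. The sketch below keeps only the points inside a sphere of a given radius. The function name and the radius value are hypothetical.

```python
import numpy as np


def cull_local_map(points, sensor_position, radius_m):
    """Keep only the points within radius_m of the sensor."""
    points = np.asarray(points, dtype=float)
    offsets = points - np.asarray(sensor_position, dtype=float)
    distances = np.linalg.norm(offsets, axis=1)
    return points[distances <= radius_m]


cloud = [[0.0, 0.0, 0.0], [5.0, 0.0, 0.0], [30.0, 0.0, 0.0]]
local_map = cull_local_map(cloud, sensor_position=[1.0, 0.0, 0.0], radius_m=10.0)
```

Because the sphere is centered on the sensor, the local map follows the robot as it flies.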

This all might sound relatively simple, but there's lots of complex math happening in the background to compute surfaces, eliminate stray points, fuse pose estimates with batches of LIDAR sweeps, and run millions of other calculations per second to keep the robot safely flying to its next objective. That's why online SLAM can be a little "sloppy", but once the robot has landed we can refocus all the power of ExynAI on refining and constraining a high-density point cloud map.

Now that we've examined how our robots use SLAM to fly autonomously in GPS-denied environments, let's look at how offline SLAM is used to refine overall map quality.

All sensor data captured during each flight is logged and stored onboard the robot, and these logs can get quite large depending on the length of the flight and the complexity of the environment. Our customers generally want the raw point cloud data gathered from our gimballed LIDAR sensor. That's where our post-processing pipeline, ExSLAM, comes in. It extracts the raw cloud from our logs and refines it for third-party software.

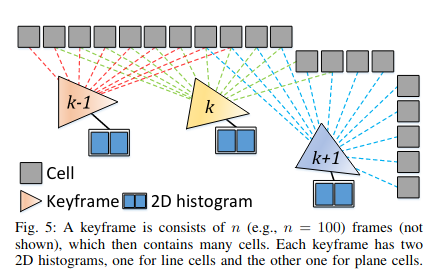

ExSLAM uses a factor graph optimization algorithm to create low-drift point cloud maps. This algorithm takes a series of LIDAR sweeps and stores them as "keyframes," each associated with a specific pose state. We can then compare each keyframe with its neighbors, looking for similar geometric features, and use those matches as loop closure constraints in the optimization. The resulting network of poses and constraints is also called a pose graph. Because these constraints depend on matching distinctive geometry, it can be difficult to produce accurate maps of featureless environments.

Why is it important for SLAM to "close the loop"? The GIF below is a great representation of a feature-based SLAM/LOAM algorithm complete with loop closure. The red dots represent the robot's pose estimates, and the green dots represent unique geometric features in the environment. You can see the farther the robot travels the more uncertain it is about its pose until it "closes the loop" and recognizes where it started. Then you can see the algorithm constrain those uncertain pose estimates and landmarks with that new knowledge.
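The effect of a loop closure can be shown with a toy pose graph. In the sketch below a robot moves along a single axis, out and back, with odometry that overestimates each outbound step and underestimates each return step. Dead reckoning therefore ends 0.4 m away from the start. Adding one loop closure constraint ("the last pose is the same place as the first") and solving a linear least-squares problem spreads that error back over the whole trajectory. This is a deliberately simplified 1D, linear stand-in. Real factor graph SLAM optimizes full 3D poses with nonlinear solvers, and every name and weight here is hypothetical.

```python
import numpy as np


def optimize_pose_graph_1d(odometry, loop_closures, loop_weight=10.0):
    """Solve for 1D poses from odometry steps and loop closure constraints.

    odometry:      measured step between consecutive poses
    loop_closures: (i, j, offset) tuples meaning pose[j] - pose[i] = offset
    """
    n = len(odometry) + 1
    rows, targets = [], []

    # Anchor the first pose at the origin so the solution is unique.
    row = np.zeros(n)
    row[0] = 1.0
    rows.append(row)
    targets.append(0.0)

    # One constraint per odometry step: pose[i + 1] - pose[i] = step.
    for i, step in enumerate(odometry):
        row = np.zeros(n)
        row[i], row[i + 1] = -1.0, 1.0
        rows.append(row)
        targets.append(step)

    # Loop closures are trusted more than odometry, so they get more weight.
    for i, j, offset in loop_closures:
        row = np.zeros(n)
        row[i], row[j] = -loop_weight, loop_weight
        rows.append(row)
        targets.append(loop_weight * offset)

    poses, *_ = np.linalg.lstsq(np.array(rows), np.array(targets), rcond=None)
    return poses


odometry = [1.1, 1.1, -0.9, -0.9]          # true steps were +1, +1, -1, -1
dead_reckoning = np.concatenate([[0.0], np.cumsum(odometry)])
optimized = optimize_pose_graph_1d(odometry, loop_closures=[(0, 4, 0.0)])
```

Comparing `dead_reckoning[-1]` with `optimized[-1]` shows the final pose being pulled back toward the starting point, and the intermediate poses shift with it.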

The end result is a high-density, low-drift point cloud map that can be easily exported. And the best part? This entire process can be done directly through the same tablet you'd use to control the ExynAero or ExynPak. No need to connect to WiFi or send your data to a third party for processing. Maps can also be georeferenced, smoothed, and down-sampled for easy file transfer.

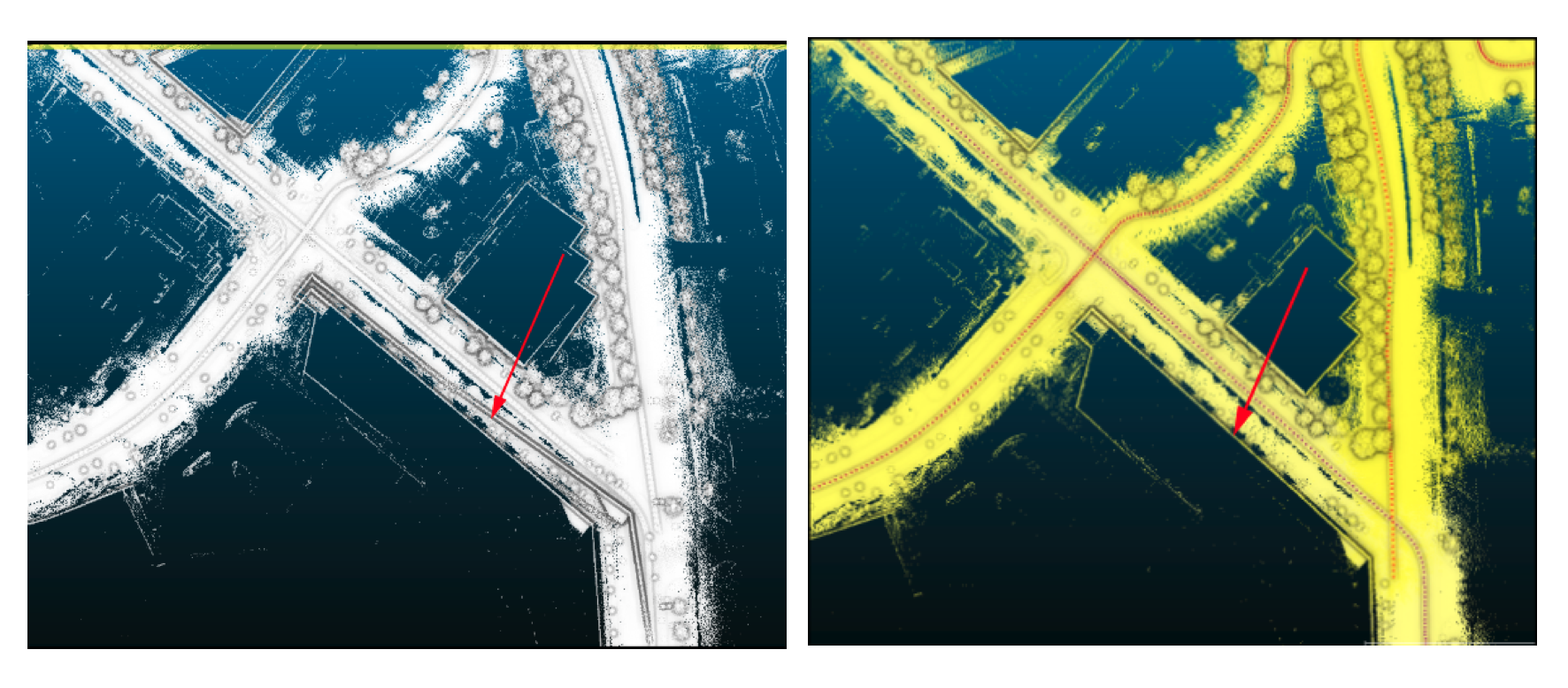

(left) Online SLAM exhibits drift during capture; notice the double wall. (right) Offline SLAM, ExSLAM, refines the map.

We're always testing new and interesting ways to create more accurate and visually captivating maps from our data sets. We're in the process of finalizing a colorization pipeline that will overlay RGB data to create realistic 3D models for digital twinning. And while the crux of our autonomy & mapping isn't reliant on GPS or existing infrastructure, we're testing how we could use GPS data in post-processing to better constrain maps with their ground truths.

Interested in seeing our 3D SLAM pipeline in action? You can request a personalized demo today.